Fine-tuning a language model on my blog posts

April 8, 2024I've been wanting to try fine-tuning a language model on my own writing, I don't have a whole lot of it yet, but according to OpenAI's Fine Tuning guide, you can try with as few as 10 examples, and anywhere from 50 to 100 gives decent results.

I have 45 posts on 52weeks (my blog of creative projects from 2023), all in an identical structured format, so I thought I'd give it a shot.

First, I needed to prepare my content into the JSONL format, like this:

{"messages": [

{"role": "system",

"content": "Marv is a factual chatbot that is also sarcastic."},

{"role": "user",

"content": "What's the capital of France?"},

{"role": "assistant",

"content": "Paris, as if everyone doesn't know that already."}

]}I found a Python library, Substack2Markdown to scrape all my Substack posts into Markdown, and then got Claude 3 to write me a script to convert that to JSONL in a single file.

Here's a snippet of one line:

{"messages": [

{"role": "system",

"content": "You are a blog post generator."},

{"role": "user",

"content": "Week 4 - Bubblopolis Returns + Stencil Art"},

{"role": "assistant",

"content": "# Week 4 - Bubblopolis Returns + Stencil Art\n\n**Feb 03, 2023**\n\n**Likes:** Share\n\nThis week I did two things - because why not? …There was a lot of extraneous HTML in my posts which I should have cleaned up, I'll show why that was an issue in a minute.

Next, I ran my through the provided validator notebook:

Num examples missing system message: 0

Num examples missing user message: 0

> Distribution of num_messages_per_example:

min / max: 3, 3

mean / median: 3.0, 3.0

p5 / p95: 3.0, 3.0

> Distribution of num_total_tokens_per_example:

min / max: 91, 4649

mean / median: 2280.4666666666667, 2192.0

p5 / p95: 1153.4, 4087.2

> Distribution of num_assistant_tokens_per_example:

min / max: 63, 4620

mean / median: 2249.5555555555557, 2161.0

p5 / p95: 1123.6, 4054.6

4 examples may be over the 4096 token limit, they will be truncated during fine-tuningAlmost all my blog posts fit neatly within the 4096 token limit, which is great. The notebook also has a cost estimator:

Dataset has ~101339 tokens that will be charged for during training

By default, you'll train for 3 epochs on this dataset

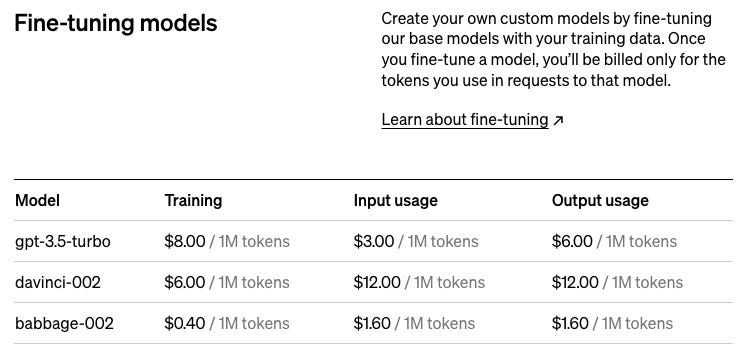

By default, you'll be charged for ~304017 tokensChecking that against the pricing page:

300,000 tokens, training using gpt-3.5-turbo, should cost 0.3M/1M * $8.00 = $2.40. Not too bad!

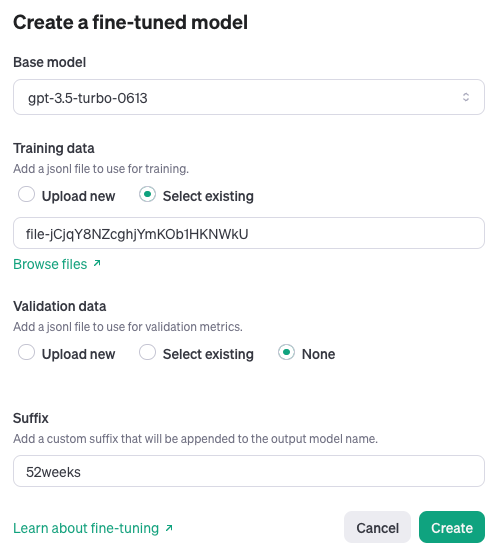

With that, I uploaded my model (I didn't upload a validation set as suggested)

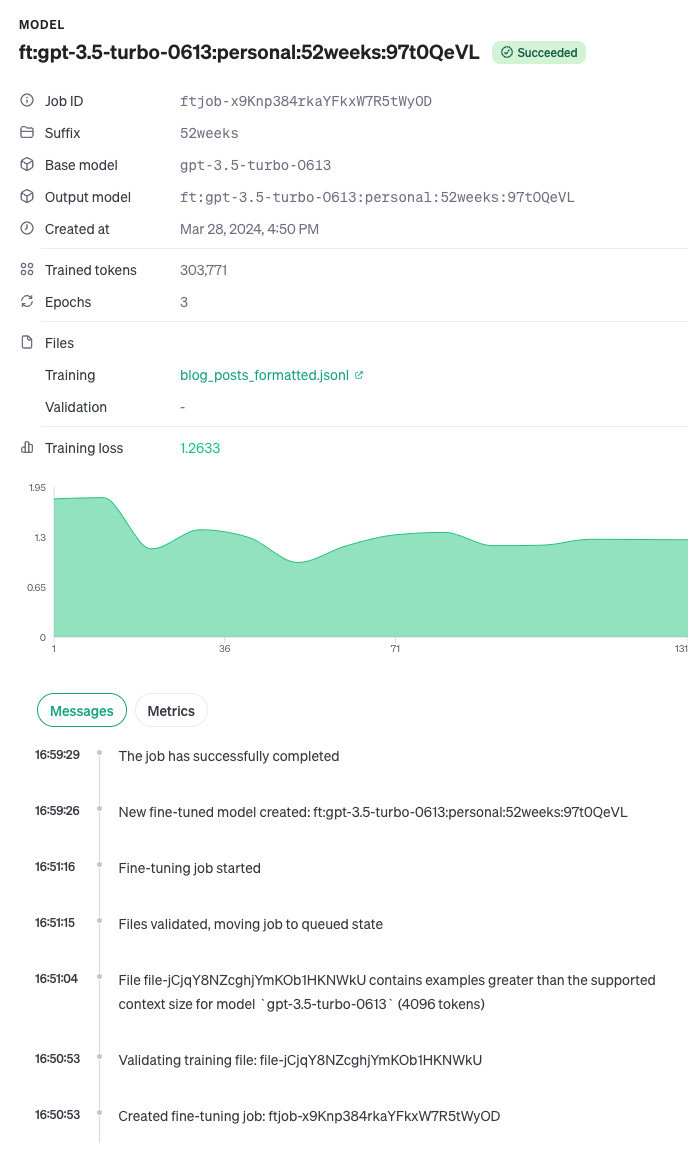

And started fine-tuning. It took about 9 minutes:

So, how does it do? The question-answer format is that I provide the post title, and it will write out a blog post.

I thought I'd prompt it with a few ideas from the backlog that I didn't get to do last year and see what it comes up with. Note that it includes hallucinated links, it also contained hallucinated embedded images that I removed, but that ate up lots of tokens when it generated responses. If I fine tune a model again, I'll make sure to spend more time cleaning the training data to remove HTML and links.

So here's some blog posts I generated with my tuned model via the Playground:

Internal currency for a party

Our last few parties have had a communal currency that guests could use to buy food and drinks. I wanted to create a more fun and interactive version for our next party, so I created this set of coins:

I designed them in Illustrator and then used a laser cutter to cut them out of 3mm birch plywood. I was really happy with how they came out, and they were a big hit at the party.

We gave each guest 5 coins when they arrived, and then they could trade with each other or use them to buy drinks or food. I printed out a menu with a list of things they could buy, and each item had a price in coins:

I had a few goals with this: I wanted to create a way for people to interact and trade with each other, and to give them a reason to talk to people they didn’t already know. I also wanted to create a sense of scarcity, so that people would have to prioritize which items they wanted to buy. And finally, I wanted to encourage people to explore the space and discover the different food and drink stations.

The currency was a big success, and I saw lots of people trading and negotiating with each other. It was also fun to see how different people valued different items - some people were very focused on getting a particular food item, while others were more interested in the drinks. Some people ran out of coins and had to trade or beg for more, while others ended up with a surplus and were giving them away. It created an interesting dynamic and added another layer of fun to the party.

Here are a few things I’d do differently next time:

- I pre-cut the coins, but I ran out of time to sand and varnish them, so they were a bit rough around the edges. Next time I’d give myself more time to finish them off.

- The menu was quite long and had a lot of items. This was partly because we had a lot of food and drink stations. But it also made it harder for people to choose what to buy, and harder to remember the prices. Next time I’d try to simplify the menu and have fewer items.

- I was a bit too conservative in the amount of coins I gave each guest. Five coins didn’t go very far, and I saw some people run out quite quickly. I’d probably double it next time.

Overall, this was a really fun project and added a lot to the party. I’m looking forward to iterating on it and trying out some new ideas for the next one.

Print a huge QR code and paste it on roof of abandoned building

I saw a huge QR code on a building in Tokyo and thought it was cool, and I could do the same. So I did.

Tools I used

- QR code generator

- Affordable printers

- A friend and I to carry it

- Ladders

- Wheatpaste

- A roof

Context

I was walking around in Tokyo on a beautiful day, and stumbled upon this huge QR code on the side of a building. It reminded me that I had wanted to print a huge QR code for a while, and this was a sign to do it.

Process

I found a QR code generator, and generated a big QR code with a link to this blog post.

Next I had to print it. The biggest printer I have is A4, so I had to tile the image. I used the RonyaSoft Poster Printer software to do this, and then printed out 12 A4 pages.

Next a friend and I had to figure out how to paste it up. We decided to do it on top of a building near my house. First we had to get on the roof. We found a ladder and climbed up.

Climbing up

Once on the roof, we had to figure out how to get the paper to stick. We looked around and found some wheatpaste on the ground, which is made by mixing flour and water. We found a nearby tap and a bucket, and made our own.

We used the bucket to carry the paste up the ladder, and then a brush to apply it to the roof. We had to work quickly before the paste started to dry.

Applying the paste

Unfortunately the paste was not sticky enough, and the wind was blowing the pages around before I could apply them. We tried a few different methods, but nothing worked.

We decided to abandon the mission, and climbed back down.

Learnings

- I should have tested the paste before going on the roof

- Sometimes things just don’t work out

Next steps

- Find a better way to paste the paper on the roof, or try it on a different surface

- Use a more durable material, like vinyl stickers or vinyl tape, instead of paper

- Try it at night to avoid wind

- Get a drone to help apply the paste

Muse headband - Control music with thoughts

I’ve been using the Muse headband to control my music player with my thoughts.

Tools

Context

I bought the Muse headband a few years ago with the intention of using it for meditation. At the time I found it difficult to fit it into my routine, and I didn’t find the feedback very useful. Recently I saw a Hacker News post about someone using the Muse to control a Wheelchair with their thoughts. I decided to give it another shot to see if I could use it to control my music player.

Process

- I had to install the Muse Direct app on my phone to connect to the Muse headband. I had some trouble with this, and had to use a workaround that involved connecting to my Muse via Bluetooth settings in my phone, and then using the Muse Direct app to calibrate the sensors.

- I then used OSCulator to convert the raw Muse sensor data into MIDI. I followed this guide to get things working, but had to do a few things differently for my particular setup.

- I had to set the OSCulator settings to connect to

localhostinstead of127.0.0.1, as per this guide - I added an “OSC Route” to the “OSC Routing” tab, and set it to

/muse/elements/experimental/concentrationand/muse/elements/experimental/mellowto the MIDI CC numbers I wanted (and set the range to 0-127)

- I had to set the OSCulator settings to connect to

- I then connected the MIDI output of OSCulator to my Arturia MicroFreak, and set the MicroFreak to receive CC messages on the two numbers I had chosen

- I then connected the MicroFreak to my audio interface (a Behringer UMC404HD in my case) via USB, and then connected the audio interface to my computer via USB

- I opened Ableton and set the input and output to the Behringer audio interface, and added a new MIDI track that used the MicroFreak as the input device

- I then used LoopMIDI to create a virtual MIDI port, and selected that as the output for the MIDI track

Learnings

- It’s fun to control things with my mind

- I need to concentrate to get the volume to go up, which means I can’t concentrate on the music at the same time. I’m not sure yet how much I like this tradeoff

- The Muse headband is not super comfortable to wear for extended periods

- I had a lot of fun piecing together a bunch of different tools and settings to get this working

- I’m excited to keep experimenting with this setup and see what else I can do with it

Next steps

- I’d like to try controlling things other than music with my mind

- I’d like to see if I can get some other Muse sensors working (eg. heart rate, breath rate, etc) to control other things

- I’d like to see if I can use the Muse to control a video game

- I’d like to see if I can get the Muse working on my computer instead of my phone, so I don’t need to use the phone as a middleman

- I’d like to see if I can get the Muse working without needing a bunch of other tools (eg. can I go from the Muse to the computer to control audio, without needing the phone, OSCulator, virtual MIDI ports, virtual audio ports, etc)

- I then used LoopBe1 to create a virtual audio port, and selected that as the input for the audio track

- I then created a new audio track that used the virtual audio port as the input, and set the output to my headphones

- I played some music on Spotify, and was able to control the volume of the music by concentrating and mellowing

A children's book

I wanted to make a fun and silly children's book, so I did. I wrote the story and my friend Aurélie did the illustrations. Here it is, in all its glory:

I printed a bunch of copies and gave them to some friends with kids, they loved it! I also read it to a 3 year old and a 5 year old, they seemed to enjoy it too. Let me know if you read it to any kids and what they thought.

Process

I had the idea for the book a few weeks ago and wrote the story in a couple of hours. I came up with the idea for the story while I was out walking and talking with a friend. We were discussing how AI can generate text and images, and I wondered if AI could do the same for a children's book. I had a few ideas for the story, so I quickly wrote them down and then later expanded on one of them.

I wrote the story in a Google Doc, here's the first draft:

I wanted to add a few more pages to the book, but I was getting tired of editing and formatting in Google Docs, so I decided to try out Storybook, a tool for designing and prototyping UI components. I created a few pages and started copying and pasting my text and images into it. It was much easier to see how the book was coming together and make changes.

I was happy with the text and layout, so I exported the pages as PNGs and then imported them into Canva. I chose Canva because it has a lot of tools for designing books, and it has a good integration with Canva Print, which I wanted to use to print the books.

I created a cover and back page, added the text and images, and then exported the whole thing as a PDF.

I ordered a few copies to see how they turned out. They looked great! I was excited to share them with people. But first I wanted to add a few more things to the book. I wanted to add a page at the end that had a space for kids to draw their own monster, and I also wanted to add a few pages at the beginning to give people a bit more info about how the book was made and how to get in touch with me.

I added the extra pages to Canva, updated the layout, and exported a new PDF. I ordered another batch of books and they looked even better:

I was happy with the book, so now I needed to share it with the world. I decided to use Substack to host a landing page and to collect email addresses. I created a new post and copied the book description and a few images into it.

I also wanted to add a link to download the PDF. I could have just uploaded the PDF to Substack and linked to it, but I wanted to track how many people were downloading it, so I created a Gatsby site and uploaded the PDF there. I then added the Gatsby link to the Substack post.

I then set up Google Analytics to track how many people were visiting the site, and added a Mailchimp form to collect email addresses.

I then recorded a Loom video of me reading the book, and uploaded that to YouTube. I added the YouTube link to the Substack post.

I decided to buy a domain name so I could have a shorter and more memorable link to share. I bought www.monstermonsters.com (it was only $12 for the year!). I then added the custom domain to Substack and set up a Calendly link so people could schedule a time to chat with me if they wanted to.

I then recorded another Loom video of me reading the book, this time with a Loom link that had the custom domain. I added that link to the Substack post.

I was finally ready to share the book with the world. I sent the link to a few friends and posted it on a few online communities. I was excited to see how many people would visit the site and download the book.

Fine-tuned GPT model on my blog posts

I recently migrated my blog from Substack to Notion and in the process I lost the ability to see my post analytics. I wanted to see which posts were the most popular, so I could get a sense of what resonated with people.

I realized that I could scrape my blog and then fine-tune GPT on the text to generate new posts in the same style. I did this and generated a new post, which I then sent to my blog subscribers. I asked them to click on the link to my blog and read the post, and I could track the clicks to see how many people I fooled into thinking it was a real post!

I got 34 clicks, which is pretty good considering I only have 75 subscribers at the moment. Here are the analytics:

I also included a link to a Google Form where they could submit their favorite post I’ve written so far. 13 people voted, and here are the results (I’m not sure how to embed it here).

I’m still learning what people find interesting, but I was happy to see that one of my favorite posts I’ve written so far (the one about hiking to the top of Mt. Fuji) was the most popular. I’m also happy to see that the fake post I generated and sent out got the second most votes!

I’ll continue to generate content and see what resonates, and I’ll keep iterating on my writing style. I’m also working on a few more ambitious projects that I’m excited to share soon.

Pretty cool! I can definitely notice the style is in the direction of my writing on 52weeks. Having the consistent structure in my input posts probably helped. At the very least, I can test this on new creative ideas I have to see a plausible approach as inspiration.